To start, a disclosure: I run a company that develops and commercialises mobile applications for the prevention, detection and early treatment of common mental health conditions. I do this because I believe it is one of the few available ways to do those things affordably and at scale. As such, I am very interested in finding out how best to develop these applications to make them engaging and effective. When I came across a meta-analysis by Joseph Firth and colleagues (Firth et al. 2017) that looks specifically at mobile interventions for anxiety disorders, I was immediately hooked.

Before I started reading I had some specific questions:

- If mobile apps work, do they work for all anxiety disorders?

- Can a digital intervention target anxiety in the context of other conditions?

- How important is it for a professional to guide the person with anxiety through the intervention?

- If people respond to the intervention, are there any markers that can help us tell them apart from non-responders, so we can offer the intervention in a targeted way?

- What characteristics of the mobile app make it a) effective and b) engaging?

These questions are important for clinicians who are trying to advise people with anxiety, which is why I want them all answered!

The authors justify the need for the meta-analysis by pointing out that anxiety disorders are extremely common and a major source of distress and disability for those who have them. They note that effective interventions such as cognitive behavioural therapy exist, but that these can be costly and hard for many people to access (certainly, NHS waiting times can currently be as long as 18 months). The rapid adoption of smartphones in the general population is an opportunity to reach many people in a way that is convenient for them and cost-effective.

Can digital interventions help people who are stuck on long waiting lists for talking treatments?

Methods

The authors followed the PRISMA statement to report their methods and results. I could not find much fault with their search strategy. They structured the search using the PICO framework and searched from inception to November 2016. As well as a fairly comprehensive list of research databases, they also searched Google Scholar. They do not state whether they registered their review prospectively. They included only English-language publications and only randomised controlled trials. They selected interventions delivered wholly or partly via a smartphone. They excluded studies in which the comparator was another smartphone application, but did include studies that compared the digital intervention with medication. The intervention did not need to target anxiety specifically, but included studies had to report a measure of anxiety as one of their outcomes. Three independent reviewers checked studies against the selection criteria, and resolved any disagreement about a paper by discussion until they reached a joint decision.

They extracted information on study characteristics, the type of intervention, effects on a general measure of anxiety and effects on specific anxiety symptoms.

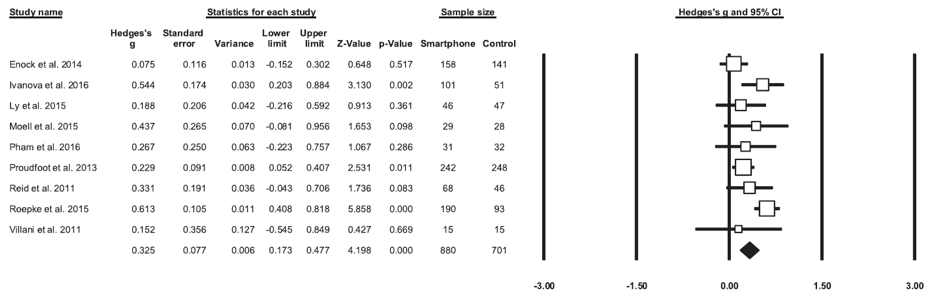

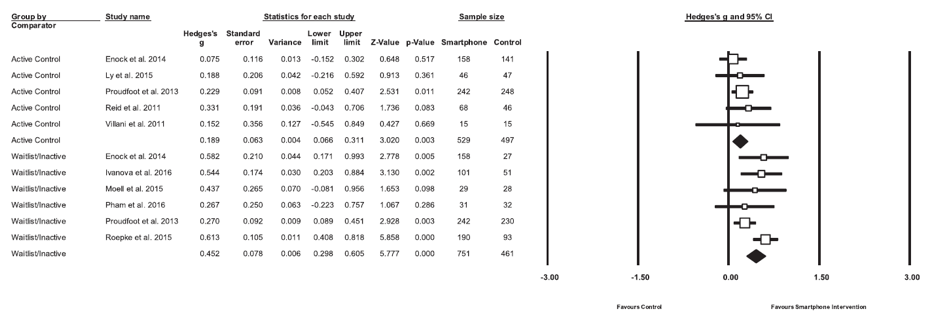

They measured and reported on heterogeneity (more on that later). The differences in mean change in total anxiety scores between intervention and control conditions were pooled and expressed as Hedges’ g. They set significance at p<0.05. When a study had no measure of general anxiety, they used the scale for the specific anxiety disorder instead and analysed it in the same way. They assessed risk of bias using the Cochrane Collaboration’s tool. They ran sensitivity analyses to check whether the results changed when only studies with complete data or intention-to-treat analysis were included. They assessed the possibility of publication bias using Egger’s t-test. They used a ‘file drawer’ analysis to calculate a ‘failsafe N’: the number of unpublished null-result studies that would have to exist to invalidate the results of the meta-analysis. Finally, they used Duval and Tweedie’s trim-and-fill analysis, which trims small studies with large effects from the positive side of the funnel plot and imputes their missing mirror images on the other side, to estimate what the pooled effect would be without that asymmetry.
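For readers less familiar with the statistics above, here is a minimal Python sketch of two of them: Hedges’ g for a single two-arm trial, and Egger’s regression test for funnel-plot asymmetry. It uses the standard textbook formulas and is not the authors’ own code or software. The function names, the sign convention (positive g means more improvement in the intervention arm) and the example numbers are all hypothetical.

```python
import math
from typing import List, Tuple


def hedges_g(mean_int: float, sd_int: float, n_int: int,
             mean_ctl: float, sd_ctl: float, n_ctl: int) -> Tuple[float, float]:
    """Hedges' g and its sampling variance for one two-arm trial.

    Inputs are mean *change* scores on an anxiety scale (negative = improvement).
    Assumed convention: positive g means the intervention arm improved more.
    """
    # Pooled standard deviation across the two arms
    sd_pooled = math.sqrt(((n_int - 1) * sd_int ** 2 + (n_ctl - 1) * sd_ctl ** 2)
                          / (n_int + n_ctl - 2))
    # Cohen's d: control change minus intervention change, so a larger
    # reduction in the intervention arm gives a positive effect
    d = (mean_ctl - mean_int) / sd_pooled
    # Small-sample correction factor J turns d into Hedges' g
    j = 1 - 3 / (4 * (n_int + n_ctl) - 9)
    var_d = (n_int + n_ctl) / (n_int * n_ctl) + d ** 2 / (2 * (n_int + n_ctl))
    return j * d, j ** 2 * var_d


def egger_test(effects: List[float], variances: List[float]) -> Tuple[float, float]:
    """Egger's regression of standardised effect (g / se) on precision (1 / se).

    Returns the intercept and its t statistic (df = k - 2). An intercept far
    from zero suggests funnel-plot asymmetry, e.g. small studies with big effects.
    """
    se = [math.sqrt(v) for v in variances]
    x = [1 / s for s in se]                    # precision
    y = [g / s for g, s in zip(effects, se)]   # standard normal deviate
    k = len(x)
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    # Ordinary least squares slope and intercept
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in residuals) / (k - 2)
    se_intercept = math.sqrt(s2 * (1 / k + x_bar ** 2 / sxx))
    return intercept, intercept / se_intercept


# Hypothetical trial: anxiety falls by 8 points with the app, 3 on the waitlist
g, var_g = hedges_g(-8.0, 10.0, 50, -3.0, 10.0, 50)
print(round(g, 3))  # 0.496
```

The correction factor J matters most for small trials; with 50 people per arm it shrinks d by less than 1%. To turn Egger’s t statistic into a p-value you would compare it with a t distribution on k − 2 degrees of freedom, which needs a statistics library.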

Results

Their database searches found eight eligible studies, and Google Scholar yielded one more. Together these included 1,837 participants, of whom 960 received smartphone interventions. The mean age was 36.1 years and 34.8% were male. Four studies recruited from the general population but used screening tools to select eligible participants. Three others recruited from clinical populations with depression, ADHD or ‘any mental health issue’. One study recruited oncology nurses.

Interventions lasted from 4 to 10 weeks. Four were entirely app-based. Others also used laptop or desktop computers, and one used the app to support face-to-face therapy.

The most common risk of bias was inadequate blinding.

Overall effect of apps on symptoms of anxiety

The meta-analysis found a small to moderate positive effect on total anxiety, but there was significant heterogeneity. There was no evidence of publication bias and the number of no-effect studies needed to invalidate the result was 75. Trim and fill analysis did not change the result. The effect sizes were larger in trials that used waitlist controls. The effects were small when compared to active control conditions.
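The heterogeneity and publication-bias checks mentioned here can also be sketched in a few lines. The Python below, again a hypothetical illustration rather than the authors’ analysis, computes an inverse-variance pooled effect with Cochran’s Q and I² (the usual heterogeneity statistics), plus Rosenthal’s classic fail-safe N. That is one common formulation of the file drawer calculation; the paper may have used a different variant. Function names and inputs are assumptions.

```python
import math
from typing import List, Tuple


def pool_fixed(effects: List[float], variances: List[float]) -> Tuple[float, float, float]:
    """Inverse-variance pooled effect, Cochran's Q and I^2 (as a percentage)."""
    weights = [1 / v for v in variances]
    pooled = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    # Q: weighted squared deviations of each study from the pooled effect
    q = sum(w * (g - pooled) ** 2 for w, g in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, q, i2


def failsafe_n(effects: List[float], variances: List[float],
               z_alpha: float = 1.645) -> float:
    """Rosenthal's fail-safe N (one-tailed alpha = 0.05 by default).

    Number of extra studies averaging a null effect (z = 0) needed to pull the
    combined Stouffer z, sum(z) / sqrt(k + N), down to z_alpha.
    """
    z_sum = sum(g / math.sqrt(v) for g, v in zip(effects, variances))
    k = len(effects)
    return max(0.0, z_sum ** 2 / z_alpha ** 2 - k)


# Two hypothetical trials with clearly different effects
pooled, q, i2 = pool_fixed([0.0, 1.0], [0.01, 0.01])
print(round(pooled, 2), round(q, 1), round(i2, 1))  # 0.5 50.0 98.0
```

Q and I² are defined with fixed-effect weights even when the headline estimate comes from a random-effects model. A fail-safe N that is large relative to the number of included studies is what supports the claim that unpublished null results are unlikely to overturn the pooled effect, although it is a fairly crude check.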

Effect of apps specifically targeting anxiety

Of the four studies evaluated, one, using diaphragmatic breathing only, did not show any difference versus waitlist controls. Another found no difference between video-based stress inoculation training and a control condition using neutral videos (although stress fell in both groups relative to waitlist controls). A third compared Cognitive Bias Modification with neutral training and a waitlist control; it showed benefits versus waitlist but no difference between Cognitive Bias Modification and neutral training. The last paper compared acceptance and commitment therapy with waitlist controls. People receiving the intervention were divided into two groups, one with automated reminders and the other with active personalised guidance from a therapist. The intervention had a significant effect versus controls, but there was no difference between the guided and unguided conditions.

Effect of apps targeting overall mental health

These apps tended to use CBT and emotional self-monitoring to identify target areas and provide personalised advice and support. The first study found no difference between the active and neutral arms, but anxiety decreased overall. The second study compared standard and enhanced versions of a wellbeing app, the enhancements being CBT and positive psychology features. Anxiety was significantly reduced compared with waitlist controls, but there was no significant difference between the regular and enhanced versions. The last intervention combined smartphone and computer-based elements to deliver a blend of CBT, problem-solving and positive psychology modules. It had moderate effects on anxiety versus both the active control and waitlist conditions.

Effects of apps targeting other conditions

Two studies looked at anxiety as a secondary outcome. The first, focusing on attention deficit hyperactivity disorder, had effects on its primary outcome measure but not on anxiety. The second compared a face-to-face behavioural activation intervention for depression with a smartphone version of the same intervention; both had similar positive effects on anxiety.

This review suggests that psychological interventions delivered via smartphones can reduce anxiety.

Conclusions

In general, these interventions appear to be well tolerated and have small to moderate effects compared with waitlist controls. When an active control was used, the effect of the intervention was usually much smaller, but it did not disappear altogether. The authors conclude that delivering treatments via smartphones is a promising and efficacious method for managing anxiety.

Actively engaging with a digital device for the purpose of reducing anxiety seems to have some beneficial non-specific effect. The authors do not label this a ‘placebo effect’, but I think that is exactly what they are seeing. They discuss engagement as a key factor for efficacy and suggest that future research should focus on it. They also note that most commercially available mental health apps do not follow evidence-based practice and have not demonstrated efficacy.

Going back to my original questions, the apps evaluated do seem to work across a variety of anxiety disorders and can, in some cases, target anxiety in the context of other conditions (here, depression). In the one study that compared guided personalised support with automated prompts, there was no difference between the two. None of the studies looked at baseline characteristics that might predict who is likely to respond. None directly evaluated engagement either, and efficacy seems broadly similar across approaches. If one study stands out as showing a stronger effect, it is perhaps Proudfoot et al. (2013), which combined CBT, positive psychology and problem-solving modules.

My take-home message is that these interventions are already showing beneficial effects and do solve the availability problem, but the technology is still in its infancy. We still need to establish which evidence-based techniques adapt best to this format, who benefits most from them, and how best to ensure consistent engagement.

How can we ensure consistent engagement with digital apps?

Links

Primary paper

Firth J, Torous J, Nicholas J, Carney R, Rosenbaum S, Sarris J. (2017) Can smartphone mental health interventions reduce symptoms of anxiety? A meta-analysis of randomized controlled trials. J Affect Disord. 2017 Aug 15;218:15-22. doi: 10.1016/j.jad.2017.04.046. Epub 2017 Apr 25.

Other references

Proudfoot J, Clarke J, Birch M, Whitton AE, Parker G, Manicavasagar V, Harrison V, Christensen H, Hadzi-Pavlovic D. (2013) Impact of a mobile phone and web program on symptom and functional outcomes for people with mild-to-moderate depression, anxiety and stress: a randomised controlled trial. BMC Psychiatry. 2013;13:312. doi: 10.1186/1471-244X-13-312.

Photo credits